Features

Performance, scalability, and dependability

Rivos delivers a differentiated approach to these challenges, offering secure, scalable, and energy-efficient data center solutions that can be tailored to a business’s needs, in either air- or liquid-cooled configurations.

All our solutions are built on a full, standard software stack adapted to Rivos hardware. This open-source-based platform provides upstream support for Rivos hardware across popular APIs and frameworks. Paired with the Rivos SDK and integrated libraries, it delivers a seamless, out-of-the-box experience for developers and enterprises alike.

Explore our range of solutions designed to meet the diverse needs of today’s AI-driven enterprises, balancing performance, scalability, and dependability at every level.

Air-cooled Scalable Server Solutions

Liquid cooling has become the standard for high-density AI server racks and is fully supported by Rivos solutions. However, many organizations have existing infrastructure that requires air cooling. Rivos provides air-cooled platform solutions to bring the benefits of AI to these organizations.

Rivos also supports traditional configurations with an x86 host, as well as more optimized self-hosted AI appliance configurations.

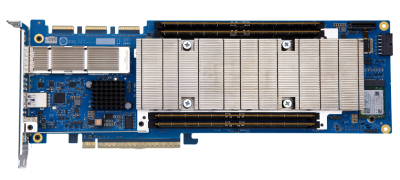

| | PCIe card | Universal Baseboard (UBB) | Self-hosted 3U server |
|---|---|---|---|
| Overview | PCIe CEM card that fits existing server GPU slots. Provides accelerated compute to x86/Arm servers, with the option of using multiple cards. | Standard AI server for standalone or rack-cluster use. UBB-style, dual-x86-hosted base system with PCIe Gen 6 links to 8 Rivos GPGPU accelerators. | Self-hosted AI appliance server with full network and management support. Can be part of a managed AI cluster. |
| Cooling | Cooled by the existing server airflow. Usable in servers with 1, 2, 4, or 8 PCIe “GPU” slots. | Full-height air-cooled configuration. Reduced-height liquid-cooled configuration. | 3U air-cooled configuration for existing data center infrastructure. 1U direct liquid cooling for dense AI compute racks. |
| Networking | Ultra Ethernet port included for external scale-out, removing the need for additional NICs on the system side. Direct Ultra Ethernet links connect the cards internally. | Each GPGPU has an external 800G Ultra Ethernet connection. Fully connected internal Ultra Ethernet mesh between the GPGPUs. | External 800G Ultra Ethernet AI backside network. OCP3 NIC for the frontside network. |