How AI is changing the rules for software and hardware design

21 November 2024

Puneet Kumar, CEO and Co-founder of Rivos Inc

As business leaders, we are navigating an era where Artificial Intelligence (AI) has rapidly moved from promise to reality, impacting nearly every sector. The speed of adoption has been unprecedented, with applications like ChatGPT shattering growth records by reaching 100 million users in just three months. This wave is redefining the way we think about both hardware and software, and ultimately, the foundations of how we innovate and grow.

This transformation calls for both chip providers and end-users to fundamentally rethink strategies for AI servers. The traditional hierarchy between software and hardware has shifted; now, it’s software that dictates the pace, requiring hardware to continuously adapt to meet new demands.

This post outlines the key areas that are essential for developing adaptable, high-performance servers that can keep pace with the rapid design cycle of AI hardware.

Rethinking hardware development cycles: Aligning with AI’s speed

AI applications, especially in the realm of Generative AI, require massive computational power. Traditionally, server hardware followed development cycles of 18 to 24 months. However, the rapid acceleration of the AI market has compressed this cadence to just 12 months, leaving less time for hardware innovations to be developed, implemented, and tested between generations.

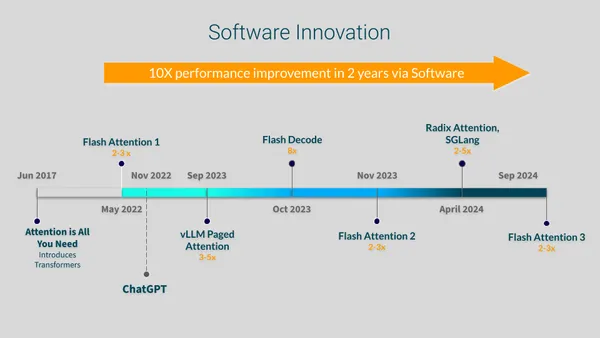

Meanwhile, on the software side, advancements like optimized Attention mechanisms have demonstrated performance gains of up to 10x within a two-year span. This contrast underscores a new reality: software is advancing at a staggering rate, setting an aggressive pace for hardware to keep up.

The practical upshot of this is an increasing need for software-defined hardware: hardware super-optimized for the most critical software tasks.

Shifting customer expectations: Embracing flexibility and versatility

Today’s customers demand versatile systems that offer flexibility to support both legacy models and the latest innovations. They expect infrastructure that can adapt seamlessly to evolving workloads, providing a future-ready foundation for the performance requirements of diverse AI applications.

The AI-driven market is inherently dynamic, requiring support for a wide range of frameworks, serving infrastructure, and languages, including PyTorch, TensorFlow, JAX, Triton, ONNX, OpenVINO, and vLLM. Model development continues at a high pace: even the “established” Transformer architecture is being challenged, with the Mamba model replacing the Transformer’s attention mechanism with selective state-space layers.

Software-defined hardware enables the development of targeted solutions optimized for specific algorithms or algorithm families. In domains like embedded and wearable systems, this approach provides the short-term efficiency needed and often serves as an ideal solution. However, these specialized designs fall short of meeting the broader, more dynamic requirements of customers in the Server AI space, where flexibility and adaptability across diverse workloads are paramount.

When selecting new hardware, customers require:

- (a) Existing models run efficiently

- (b) New and future models are supported quickly and perform well

- (c) Any framework they choose is supported

The specialized approaches only cover (a). A programmable approach is needed to support (b) and (c).

How can software-defined hardware techniques be applied to enable Server AI acceleration?

The approach parallels traditional CPU design, adapted to the needs of AI workloads. The existing programming model must be preserved so that the hardware is intuitive to use and porting effort is minimal. Hardware designers can then analyze the extensive existing codebase to optimize the programmable engine. Further gains come from adding hardware features that super-optimize critical or anticipated tasks, while unnecessary functions are removed (or shifted to software implementations for backward compatibility) to reduce power usage and silicon area. Unlike traditional CPU workloads, however, these AI algorithms are designed specifically for highly parallel, multi-threaded processing.
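To make the idea of “preserving the programming model while shifting removed functions to software” more concrete, here is a minimal sketch in Python. It assumes a hypothetical accelerator that implements only `add` and `mul` natively (simulated here as plain Python functions) and has dropped a rarely used `sub` operation, which is instead emulated in software from the native operations. All names (`NATIVE_OPS`, `SOFTWARE_OPS`, `run`, `where`) and the choice of which operations are native are illustrative assumptions, not a description of any real product or API.

```python
# Hypothetical sketch: one programming model, with some operations served
# by (simulated) hardware and others emulated in software for backward
# compatibility. Op names and the hardware/software split are assumptions.
from typing import Callable, Dict, List

Vector = List[float]


# --- Operations the hypothetical accelerator implements natively ---
def hw_add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


def hw_mul(a: Vector, b: Vector) -> Vector:
    return [x * y for x, y in zip(a, b)]


NATIVE_OPS: Dict[str, Callable[..., Vector]] = {"add": hw_add, "mul": hw_mul}


# --- Rarely used operation dropped from silicon, emulated in software ---
def sw_sub(a: Vector, b: Vector) -> Vector:
    # a - b composed from native ops: a + (b * -1)
    neg_b = NATIVE_OPS["mul"](b, [-1.0] * len(b))
    return NATIVE_OPS["add"](a, neg_b)


SOFTWARE_OPS: Dict[str, Callable[..., Vector]] = {"sub": sw_sub}


def where(op: str) -> str:
    """Report which path would serve an op."""
    if op in NATIVE_OPS:
        return "hardware"
    if op in SOFTWARE_OPS:
        return "software"
    return "unsupported"


def run(op: str, *args: Vector) -> Vector:
    """Single entry point: caller code is the same wherever the op runs."""
    if op in NATIVE_OPS:
        return NATIVE_OPS[op](*args)
    if op in SOFTWARE_OPS:
        return SOFTWARE_OPS[op](*args)
    raise NotImplementedError(f"unsupported op: {op}")


if __name__ == "__main__":
    print(run("add", [1.0, 2.0], [3.0, 4.0]), where("add"))  # [4.0, 6.0] hardware
    print(run("sub", [5.0, 7.0], [2.0, 3.0]), where("sub"))  # [3.0, 4.0] software
```

The design choice the sketch illustrates is that callers only ever use `run`, so an operation can move from silicon to a software fallback (or back) between hardware generations without any change to user code.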

Historically, general-purpose parallel computations have been executed on GPUs, and software-defined hardware therefore points toward a General Purpose GPU (GPGPU) model, specialized for AI workloads. Practically speaking, much of today’s cutting-edge AI research, including the advancements in Attention models mentioned above, is developed on GPGPUs.

Leveraging the power of Open Source: Enabling collaborative innovation

Being future-ready means not only addressing current customer needs but also anticipating and responding to rapid change. A robust software and hardware co-design strategy, supported by the agility of open source technology, offers the flexibility to adapt to these shifts quickly and effectively. In addition, it allows organizations to establish partnerships across multiple players in an ecosystem, creating a system where resources can be shared, modified, and customized on demand. The same is true of open standards. Combining open source with open standards avoids the risk of being locked into a single vendor.

Open source technology has proven invaluable for driving innovation through collective intelligence, reducing development costs, and speeding up time-to-market. The collaborative nature of open source initiatives allows companies to leverage shared knowledge, avoid duplicated effort, and quickly build upon the advances of others. It is therefore vital to invest in partnerships and to ensure that solutions are transparent, modifiable, and adaptable to future needs.

Note that most of these open source benefits come from the collaborative nature of the community. Publishing source code on its own does provide visibility into the technology used, but that is not where the power lies. The true value comes from collaborating and contributing hardware-specific source code to existing projects, especially ones maintained and audited by a cross-organization community of developers. AI servers for general deployment should fit within an open source stack and use open standards to maximize their impact.

Future-proofing with software-driven innovation

Server AI chip designers should follow a software-defined hardware approach and produce GPGPU architecture designs that support the open source and open standards community. This will enable the hardware to grow and evolve with the speed of software innovation.

End users of Server AI systems should favor vendors who follow this approach and are visibly active in the open source community. This will enable:

- Optimized investments. The ease of programmability allows the hardware to run existing code efficiently while adapting at the cadence of software advances. This makes the server immediately useful, while extending its useful life.

- Agility in adoption. Use of open source and open standards prevents vendor lock-in and enables differentiation across functionality, price and performance. Users can adopt the technology that works best for their application and datacenter needs.

- Sustainable growth. Targeting efficiency and lower power consumption reduces operating costs, while the longer useful life that programmability provides spreads capital costs over more years. Open standards decouple upgrades of different parts of the whole system. Scaling AI solutions therefore becomes manageable and sustainable.

To succeed in the AI-driven landscape, leaders must prioritize adaptable, scalable, and future-ready AI server solutions. By embracing software-defined, GPGPU-style architectures together with open source and open standards, organizations can ensure flexibility, efficiency, and seamless integration.